Published in Military Embedded Systems

Co-authored by Rich Jaenicke, Green Hills Software

While multicore processors offer designers of safety-critical avionics the significant benefits of smaller size, lower power, and increased performance, bringing those benefits to safety-critical systems has proved challenging. That’s due mainly to the complexity of validating and certifying multicore software and hardware architectures. Of principal concern is how an application running on one core can interfere with an application running on another core, negatively affecting determinism, quality of service, and – ultimately – safety.

Efforts to ease the safety-critical implementation of multicore processors are underway. Several standards have been updated to address multicore issues.

These include ARINC 653, which covers space and time partitioning of real-time operating systems (RTOSs) for safety-critical avionics applications. ARINC 653 was updated in 2015 (ARINC 653 Part 1 Supplement 4) to address multicore operation for individual applications, which it calls “partitions.” The Open Group’s Future Airborne Capability Environment (FACE) technical standard version 3.0 addresses multicore support by requiring compliance with Supplement 4. Additionally, the Certification Authorities Software Team (CAST) – supported by the FAA, EASA, TCCA, and other aviation authorities – has published a position paper with guidance for multicore systems called CAST-32A. Together, these documents provide the requirements for successfully using multicore solutions for applications certifiable up to DAL A, the highest RTCA/DO-178C design assurance level for safety-critical software.

Benefits of multicore

The benefits of a multicore architecture are numerous and compelling:

Higher throughput: Multithreaded applications running on multiple cores scale in throughput. Multiple single-threaded applications can run faster when each runs concurrently on its own core. With optimal core utilization, throughput can scale linearly with the number of cores.

Better SWaP [size, weight, and power]: Applications can run on separate cores in a single multicore processor instead of on separate single-core processors. For airborne systems, lower SWaP reduces costs and extends flight time.

Room for future growth: The higher performance of multicore processors supports future requirements and applications.

Longer supply availability: Most single-core chips are obsolete or close to obsolete. A multicore chip offers a processor at the start of its supply life.

Challenges for multicore in safety-critical applications

In a single-core processor, multiple safety-critical applications may execute on the same processor by robustly partitioning the memory space and processor time between the hosted applications. Memory-space partitioning dedicates a nonoverlapping portion of memory to each application running at a given time, enforced by the processor’s memory management unit (MMU). Time partitioning divides a fixed-time interval, called a major frame, into a sequence of fixed subintervals referred to as partition time windows. Each application is allocated one or more partition time windows, with the length and number of windows determined by the application’s worst-case execution time (WCET) and required repetition rate. The operating system (OS) ensures that each application is provided access to the processor’s core during its allocated time. Applying these safety-critical techniques to multicore processors requires overcoming several complicated challenges, the most difficult being interference between cores via the shared resources.
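As a rough illustration of the time-partitioning scheme described above, the following Python sketch checks that a set of partition time windows fits inside a major frame without overlapping and totals the time each partition receives per frame. The partition names, the 100 ms major frame, and the window lengths are hypothetical values chosen for illustration; they do not come from ARINC 653 or from any particular RTOS configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeWindow:
    partition: str    # hypothetical partition name
    offset_ms: int    # start of the window, relative to the major frame start
    duration_ms: int  # length of the window


def partition_budgets(major_frame_ms, windows):
    """Check that the windows fit in the major frame without overlap.

    Returns the total time per major frame allocated to each partition.
    Raises ValueError if the schedule is malformed.
    """
    budgets = {}
    previous_end = 0
    for w in sorted(windows, key=lambda w: w.offset_ms):
        if w.duration_ms <= 0:
            raise ValueError(f"{w.partition}: window must have positive length")
        if w.offset_ms < previous_end:
            raise ValueError(f"{w.partition}: window overlaps the previous one")
        previous_end = w.offset_ms + w.duration_ms
        if previous_end > major_frame_ms:
            raise ValueError(f"{w.partition}: window runs past the major frame")
        budgets[w.partition] = budgets.get(w.partition, 0) + w.duration_ms
    return budgets


# Hypothetical 100 ms major frame. "flight_control" gets two windows because,
# in this made-up example, it must run twice per major frame.
SCHEDULE = [
    TimeWindow("flight_control", 0, 20),
    TimeWindow("display", 20, 30),
    TimeWindow("flight_control", 50, 20),
    TimeWindow("maintenance", 70, 30),
]

if __name__ == "__main__":
    print(partition_budgets(100, SCHEDULE))
```

In a real system, each window length would be derived from the partition’s measured WCET plus margin, and the number of windows from its required repetition rate; the sketch only validates the resulting layout.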

Interference between cores

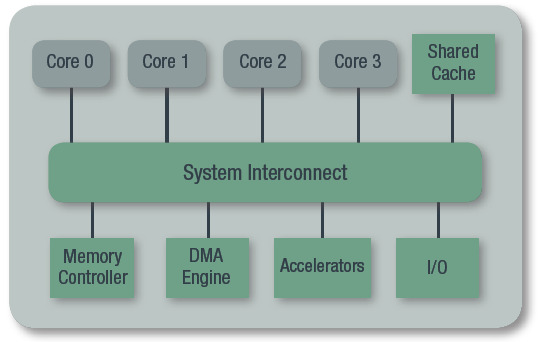

In a multicore environment, each processing core has limited dedicated resources. All multicore hardware architectures also include shared resources, such as memory controllers, DDR memory, I/O, cache, and the internal fabric that connects them (Figure 1). Contention results when multiple cores try to concurrently access the same resource. This situation means that a lower criticality application/partition could keep a higher criticality application/partition from performing its intended function. In a quad-core system, with cores only accessing DDR memory over the interconnect (i.e., no I/O access), multiple sources of interference from multiple cores have been shown to increase WCET by more than a factor of 12. Due to shared resource arbitration and scheduling algorithms in the DDR controller, fairness is not guaranteed and interference impacts are often nonlinear. In fact, tests show a single interfering core can increase WCET on another core by a factor of 8.

Figure 1: Separate processor cores (in gray) share many resources (in green), ranging from the interconnect to the memory and I/O.
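To see why WCET increases of this size matter for time partitioning, consider a small worked example in Python. It treats interference as a simple multiplier on a partition’s isolated WCET and asks whether the inflated value still fits in the partition time window. The factors of 8 and 12 are the figures cited above (12 being a lower bound, since the reported increase was more than 12 times); the 2 ms isolated WCET and the 20 ms window are hypothetical numbers, and the single-multiplier model is an assumption that ignores the nonlinear effects noted in the text.

```python
def inflated_wcet_ms(isolated_wcet_ms, interference_factor):
    """WCET under interference, modeled (as an assumption) as a plain multiplier."""
    return isolated_wcet_ms * interference_factor


def fits_window(isolated_wcet_ms, interference_factor, window_ms):
    """True if the inflated WCET still fits inside the partition time window."""
    return inflated_wcet_ms(isolated_wcet_ms, interference_factor) <= window_ms


def max_tolerable_factor(isolated_wcet_ms, window_ms):
    """Largest interference multiplier the window can absorb."""
    return window_ms / isolated_wcet_ms


# Hypothetical partition: 2 ms WCET measured in isolation, 20 ms time window.
ISOLATED_WCET_MS = 2
WINDOW_MS = 20

if __name__ == "__main__":
    for factor in (1, 8, 12):
        print(factor, fits_window(ISOLATED_WCET_MS, factor, WINDOW_MS))
    print(max_tolerable_factor(ISOLATED_WCET_MS, WINDOW_MS))
```

Under these assumed numbers, the window absorbs the factor-of-8 case (16 ms) but not the factor-of-12 case (24 ms), even though the partition uses only a tenth of its window when running alone.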

CAST-32A provides certification guidance for addressing interference in multicore processors. One approach is to create a special use case based on testing and analysis of WCET for every application/partition and its worst-case utilization of shared resources. Special use case solutions, though, can lead to vendor lock-in and to reverification of the entire system whenever any one application/partition changes, making that approach a significant barrier to the implementation and sustainment of an integrated modular avionics (IMA) system. Without OS mechanisms and tools to support the mitigation of interference, sustainment costs and risk are very high, because a change to any one application requires complete WCET reverification activities for all integrated applications.

The better approach is to have the OS manage interference itself, using DAL A runtime mechanisms, libraries, and tools that address CAST-32A objectives. This provides the system integrator with an effective, flexible, and agile solution. It also simplifies the addition of new applications without major changes to the system architecture, reduces reverification activities, and helps eliminate OEM vendor lock-in.
